I am often asked why I disapprove of static linking. The time has come for me to write an article about this issue and to propose a solution. Unfortunately, the great majority of programmers understand what static linking does and how it is used, but not what it is for. People generally tend to take things for granted. Given that we live in an imperfect world, I would postulate that it is impossible to become a good programmer without being a freethinker as well.

That said, let's start by listing static linking's merits:

- It comes to the aid of build systems, helping them improve build times by avoiding unnecessary recompilations when the leaf dependencies of executable or shared object files have remained unchanged. (This also applies to relocatable files, but they invariably are mere mediators—including them on the list of virtues would result in circular logic.)

- When a common ABI (application binary interface) is employed, linking statically makes it possible for programs written in one language to execute code written in another without OS (operating system) intervention.

- It allows vendors to distribute closed-source libraries in the form of object files.

The first two points are of a technical nature, while the last is of a political one. I will address them in the same order and then provide some extra criticism.

For those of you unfamiliar with the usual building process, here's a quick recap. In order to build a target, all of its dependencies must be up-to-date. If they are not, they, too, become targets—it's a recursive procedure on a DAG (directed acyclic graph), called chaining. Finally, to actually build a target, a set of rules must be executed. So far, so good.
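The recap above can be sketched in a few lines. This is a minimal illustration, assuming a time-stamp-based build tool; the graph, the file names other than qux.c and qux.o, and the `build` helper are all made up for the example and are not a reproduction of any particular build system.

```python
# Hypothetical dependency graph: target -> list of dependencies.
# Everything except qux.c / qux.o is an invented name.
DEPS = {
    "app": ["libfoo.a", "main.o"],
    "libfoo.a": ["qux.o", "bar.o"],
    "qux.o": ["qux.c"],
    "bar.o": ["bar.c"],
    "main.o": ["main.c"],
}


def build(target, mtime, clock, rebuilt):
    """Bring `target` up to date and return its modification time."""
    deps = DEPS.get(target, [])
    if not deps:
        # Leaf of the DAG: a source file, which is never built.
        return mtime[target]
    # Chaining: every dependency becomes a target first.
    newest = max(build(dep, mtime, clock, rebuilt) for dep in deps)
    if mtime.get(target, -1) < newest:
        # Stand-in for "executing the rules" of the target.
        clock[0] += 1
        mtime[target] = clock[0]
        rebuilt.append(target)
    return mtime[target]


# Clean build: every non-leaf target is built once.
mtime = {"qux.c": 1, "bar.c": 1, "main.c": 1}
clock = [1]
first = []
build("app", mtime, clock, first)

# Touch qux.c (any edit at all, even a comment) and build again.
clock[0] += 1
mtime["qux.c"] = clock[0]
second = []
build("app", mtime, clock, second)
print(second)
```

In this toy graph the second build redoes qux.o, libfoo.a and app, regardless of what the edit to qux.c actually was.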

The foremost problem arises from the fact that starting at the translation unit level means we get a coarse-grained chain. Imagine changing qux.c in the accompanying diagram in a way that doesn't affect qux.o, such as adding a comment or modifying a routine qux.o doesn't call. As can be seen, this would result in four extraneous targets; again, a recursive drill. For very large projects, build times can go through the roof because of this.

Regarding this particular argument, I would like to point out that it is really the design of current build systems that is fundamentally flawed. Static linking just happens to be the building block that facilitates the problem: object file time stamps alone hold no information about what was and wasn't modified in a translation unit, nor about which parts of their dependencies targets actually rely upon.
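To make the point about time stamps concrete, here is a rough sketch that compares a content-based fingerprint with what a time stamp would report. The `fingerprint` and `needs_rebuild` helpers are hypothetical, and the comment stripping is deliberately naive (it ignores string literals and the preprocessor), so this shows the idea rather than a safe criterion for skipping work.

```python
import hashlib
import re

# Naive matcher for C block comments and line comments.
# Assumption: no comment-like text inside string literals.
_COMMENT = re.compile(r"/\*.*?\*/|//[^\n]*", re.DOTALL)


def fingerprint(c_source: str) -> str:
    """Hash the source with comments and whitespace runs removed."""
    stripped = _COMMENT.sub(" ", c_source)
    normalized = " ".join(stripped.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def needs_rebuild(old_source: str, new_source: str) -> bool:
    """True only if the edit changed something beyond comments/spacing."""
    return fingerprint(old_source) != fingerprint(new_source)


before = "int qux(void) { return 1; }\n"
commented = "/* explain */\nint qux(void) { return 1; }  // trailing\n"
changed = "int qux(void) { return 2; }\n"

# A time stamp would flag both edits; the fingerprint flags only one.
comment_only = needs_rebuild(before, commented)
real_change = needs_rebuild(before, changed)
print(comment_only, real_change)
```

A time stamp sees both edits as "qux.c is newer", whereas even this crude fingerprint tells the comment-only edit apart from the one that changes the returned value.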

At first glance, using static linking in order to connect code written in different languages seems like the way to go. Dynamic linking would be a workaround, while compiler-specific extensions would result in loss of portability. Although I have nothing to complain about regarding this specific practice, the penultimate paragraph discusses an issue that encompasses it.

While I may not be a huge fan of making sacrificial technical decisions for political gains, I recognize that different people have different needs. What would it take for me to accept static linking as a solution to the closed-source library distribution problem? Well, it would have to be the only realistic approach—it obviously isn't. Evidently, dynamic linking works just as well for libraries, if not better given its benefits in loading overhead and memory footprint.

Something else that static linking will screw up is micro-optimization, as this is much more difficult to get right at this stage in the building process. In particular, it will get in the way of the compiler deciding what should be inlined and what should not. Furthermore, because calling conventions are so rigid, it will also bungle register and/or stack allocation across object file boundaries.

What I advocate is whole-program compilation by compilers with translation caches. This would integrate the build system more tightly with the compiler, allowing dependencies to be tracked at a finer granularity than the translation unit. One benefit is that, for clean builds, this strategy is capable of whole-program optimization. As for other builds, the amount of non-incremental compilation, which would likely result in better-optimized code, can be constrained by the preferred compilation time; this should be particularly useful in JIT compilers, which could combine this idea with runtime profiling. If multiple languages are required, the ABI could define an IR (intermediate representation), perhaps bytecode.
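As a rough illustration of what a translation cache might look like, the sketch below caches translated output per function rather than per translation unit. The `TranslationCache` class, its method names and the string returned by `_translate` are all hypothetical stand-ins; no existing compiler is being described.

```python
import hashlib


class TranslationCache:
    """Toy compiler front door with a per-function translation cache."""

    def __init__(self):
        self._cache = {}        # fingerprint -> translated unit
        self.translations = 0   # units actually translated so far

    def _translate(self, name, body):
        # Stand-in for real code generation.
        self.translations += 1
        return "<code for " + name + ">"

    def compile_program(self, functions):
        """`functions` maps a function name to its source text."""
        output = {}
        for name, body in functions.items():
            key = hashlib.sha256((name + "\0" + body).encode("utf-8")).hexdigest()
            if key not in self._cache:
                self._cache[key] = self._translate(name, body)
            output[name] = self._cache[key]
        return output


cache = TranslationCache()
program = {"qux": "return 1;", "bar": "return qux();"}

cache.compile_program(program)      # clean build: both functions translated
after_clean = cache.translations

program["qux"] = "return 2;"        # edit a single function
cache.compile_program(program)      # only qux is translated again
after_edit = cache.translations
print(after_clean, after_edit)
```

A real cache would also have to key each entry on whatever the optimizer used from other functions (for example, a callee body that was inlined), which is where the trade-off between incremental compilation and optimization quality mentioned above comes in.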

|

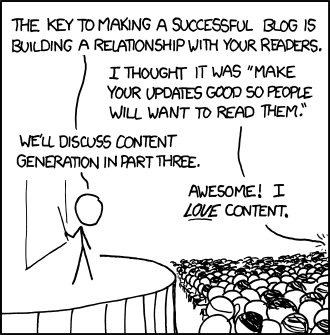

| A change in qux.c propagates throughout the DAG. |

|

| Courtesy of Randall Munroe. |
